Telcordia SR-332 Handbook

ReliaSoft software applications provide a powerful range of solutions to facilitate a comprehensive set of reliability engineering modeling and analysis techniques. ReliaSoft products help:

- Drive reliability improvement by design, both qualitatively and quantitatively, while infusing Design for Reliability (DFR) activities with relevant information that can be used for next-generation products.
- Use data-driven analysis to address today's demanding performance specifications, while capturing enterprise-wide knowledge within a self-improving, closed-loop system.
- Quickly discover design and process flaws before release to manufacturing while evaluating and improving design margins to reduce development time and cost.
- Accurately estimate system availabilities and maintenance schedules from field and test data to reduce field failures and system downtimes.
- Forecast system and component failures and estimate returns, reducing costs through better warranty modeling and spare-part inventory management.
- Determine the reliability and availability of the system, resulting in reduced operating costs and minimized unscheduled downtime.
- Use advanced statistical methods to support management in financial decision planning.

Anto Peter, Diganta Das, and Michael Pecht

This paper begins with a brief history of reliability prediction of electronics and MIL-HDBK-217. It then reviews some of the specific details of MIL-HDBK-217 and its progeny and summarizes the major pitfalls of MIL-HDBK-217 and similar approaches.

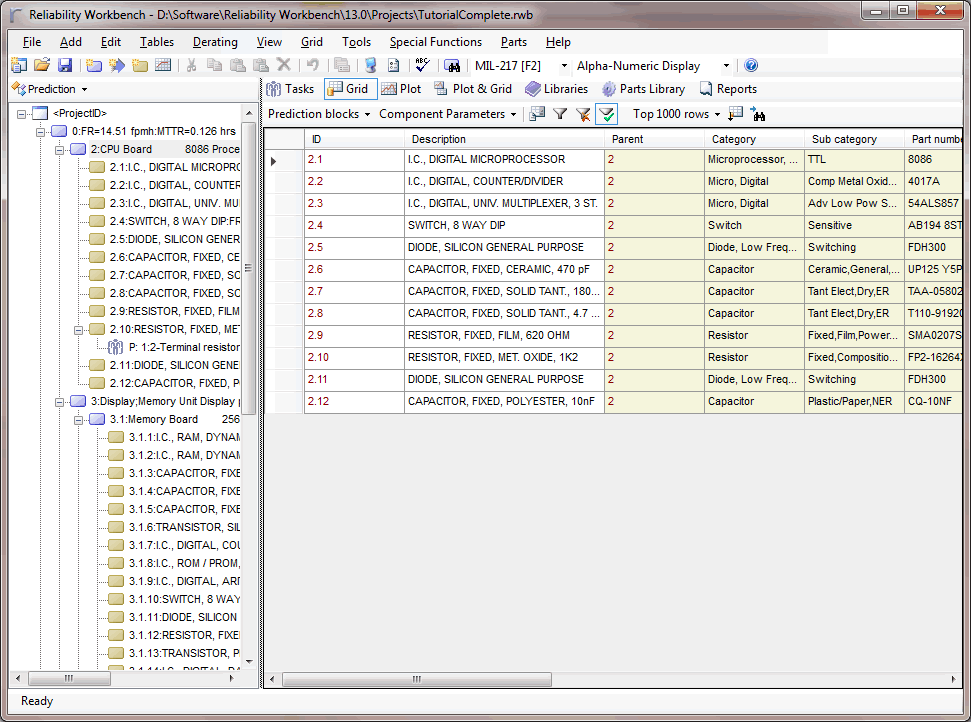

Telcordia SR-332 in Reliability Workbench software provides reliability prediction of commercial electronic components. The prediction obtained according to MIL-HDBK-217 is much higher than the result from Bellcore/Telcordia SR-332.

The effect of these shortcomings on the predictions obtained from MIL-HDBK-217 and similar methodologies is then demonstrated through a review of case studies. Lastly, this paper briefly reviews RIAC 217Plus and identifies the shortcomings of this methodology.

HISTORY

Attempts to test and quantify the reliability of electronic components began in the 1940s during World War II. During this period, electronic tubes were the most failure-prone components used in electronic systems (McLinn, 1990; Denson, 1998).

These failures led to various studies and the creation of ad hoc groups to identify ways in which the reliability of electronic systems could be improved. One of these groups concluded that in order to improve performance, the reliability of the components needed to be verified by testing before full-scale production. The specification of reliability requirements, in turn, led to a need for a method to estimate reliability before the equipment was built and tested. This step was the inception of reliability prediction for electronics.

By the 1960s, spurred on by Cold War events and the space race, reliability prediction and environmental testing became a full-blown professional discipline (Caruso, 1996). The first dossier on reliability prediction, TR-1100, Reliability Stress Analysis for Electronic Equipment, was released by the Radio Corporation of America (RCA), one of the major manufacturers of electronic tubes (Saleh and Marais, 2006). The report presented mathematical models for estimating component failure rates and served as the predecessor of what would become, in the decades to come, the standard and mandatory requirement for reliability prediction: MIL-HDBK-217.

The authors are at the Center for Advanced Life Cycle Engineering at the University of Maryland.

The methodology first used in MIL-HDBK-217 was a point estimate of the failure rate, which was estimated by fitting a line through field failure data. Soon after its introduction, all reliability predictions were based on this handbook, and all other sources of failure rates, such as those from independent experiments, gradually disappeared (Denson, 1998). The failure to use these other sources was partly due to the fact that MIL-HDBK-217 was often a contractually cited document, leaving contractors with little flexibility to use other sources. Around the same time, a different approach to reliability estimation that focused on the physical processes by which components were failing was initiated.

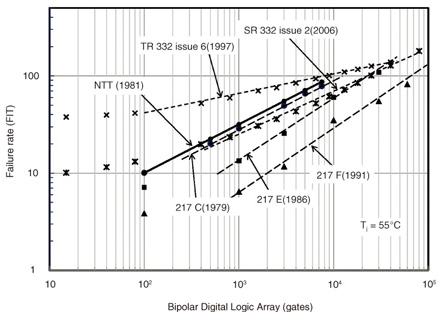

This approach would later be termed “physics of failure.” The first symposium on this topic was sponsored by the Rome Air Development Center (RADC) and the IIT Research Institute (IITRI) in 1962. These two approaches, regression analysis of failure data and reliability prediction through physics of failure, seemed to be diverging, with “the system engineers devoted to the tasks of specifying, allocating, predicting, and demonstrating reliability, while the physics-of-failure engineers and scientists were devoting their efforts to identifying and modeling the physical causes of failure” (Denson, 1998).

The push toward the physics-of-failure approach resulted in an effort to develop new models for reliability prediction for MIL-HDBK-217. These new methods were dismissed as being too complex and unrealistic, so even though the RADC took over responsibility for preparing MIL-HDBK-217B, the physics-of-failure models were not incorporated into the revision. Over the course of the 1980s, MIL-HDBK-217 was updated several times, often to include newer components and more complicated, denser microcircuits. The failure rates, which were originally estimated for electronic tubes, now had to be updated to account for the complexity of these devices.

As a result, the MIL-HDBK-217 prediction methodology evolved to assume that times to failure were exponentially distributed and used mathematical curve fitting to arrive at a generic constant failure rate for each component type. Other reliability models, which used the gate and transistor counts of microcircuits as a measure of their complexity, were developed in the late 1980s.
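Under the constant failure rate assumption just described, time to failure is exponentially distributed, so survival probability and mean time to failure both follow from a single number λ. A minimal Python sketch; the 1,000-FIT rate (FIT = failures per 10⁹ device-hours) is an arbitrary illustration, not a handbook value:

```python
import math

def reliability(failure_rate: float, t: float) -> float:
    """R(t) = exp(-lambda * t): probability of surviving to time t at a constant rate."""
    return math.exp(-failure_rate * t)

def mttf(failure_rate: float) -> float:
    """Mean time to failure of an exponential lifetime: 1 / lambda."""
    return 1.0 / failure_rate

def fit_to_per_hour(fits: float) -> float:
    """Convert FITs (failures per 1e9 device-hours) to failures per hour."""
    return fits / 1e9

lam = fit_to_per_hour(1000.0)     # illustrative rate, about 1e-6 failures/hour
print(mttf(lam))                  # about 1e6 hours
print(reliability(lam, 8760.0))   # chance of surviving one year of continuous use
```

The hazard rate implied by this model is the same at 10 hours as at 100,000 hours; nothing in it can represent early failures or wear-out.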

With the automotive and telecommunication industries adopting methodologies similar to MIL-HDBK-217, the handbook’s practices proliferated in the commercial sector. At the same time, several researchers had experimental data showing that the handbook-based methodologies were fundamentally flawed in their assumptions. However, these results were often either explained away as anomalies or labeled as invalid. By the 1990s, the handbooks were struggling to keep up with new components and technological advancements. In 1994, another major development occurred: the initiation of the U.S. Military Specification and Standards Reform (MSSR).

The MSSR identified MIL-HDBK-217 as the only standard that “required priority action as it was identified as a barrier to commercial processes as well as major cost drivers in defense acquisitions” (Denson, 1998). Despite this, no new model was developed to supplement or replace MIL-HDBK-217 in the 1990s. Instead, a final revision was made to the handbook in the form of MIL-HDBK-217F (Notice 2, 1995). At this stage, the handbook-based methodologies were already outdated in their selection of components and the nature of failures considered.

By the 1990s, electronic systems were vastly more complicated and sophisticated than they had been in the 1960s, when the handbook was developed. Failure rates were no longer determined by components, but rather by system-level factors such as manufacturing, design, and software interfaces. Based on the new understanding of the critical failure mechanisms in systems and the physics underlying failures, MIL-HDBK-217 was found to be completely incapable of predicting system reliability.

Moving forward into the 2000s and up through 2013, methodologies based on MIL-HDBK-217 were still being used in industry to predict reliability and provide such metrics as mean time to failure and mean time between failures (MTTF and MTBF). These metrics are still used as estimates of reliability, even though both the methodologies and the database of failure rates used to evaluate them are outdated. The typical feature size when MIL-HDBK-217 was last updated was on the order of 500 nm, while commercially available electronic packages today have feature sizes of 22 nm (e.g., the Intel Core i7 processor). Furthermore, many components, both active and passive, that are now common, such as niobium capacitors and insulated gate bipolar transistors (IGBTs), either had not been invented or were not in widespread use at the time of the last MIL-HDBK-217 revision. Needless to say, the components and their use conditions, the failure modes and mechanisms, and the failure rates of today’s systems are vastly different from those of the components for which MIL-HDBK-217 was developed.

Hence, the continued application of these handbook methodologies by industry is misguided and misleading to customers and designers alike. The solution is not to develop an updated handbook like RIAC 217Plus, but rather to concede that reliability cannot be predicted by deterministic models. The use of methodologies based on MIL-HDBK-217 has proven to be detrimental to the reliability engineering community as a whole.

MIL-HDBK-217 AND ITS PROGENY

In this section, we review the different standards and reliability prediction methodologies, comparing them to the latest draft of IEEE 1413.1, Guide for Developing and Assessing Reliability Predictions Based on IEEE Standard 1413 (Standards Committee of the IEEE Reliability, 2010). Most handbook-based prediction methodologies can be traced back to MIL-HDBK-217 and are treated as its progeny.

As mentioned above, MIL-HDBK-217 was based on curve fitting a mathematical model to historical field failure data to determine the constant failure rate of parts. Its progeny use similar prediction methods, which are based purely on fitting a curve through field or test failure data. Like MIL-HDBK-217, these methodologies use some form of a constant failure rate model and do not consider actual failure modes or mechanisms. Hence, they are only applicable in cases where systems or components exhibit relatively constant failure rates. Table D-2 lists some of the standards and prediction methodologies that are considered to be the progeny of MIL-HDBK-217.

TABLE D-2 MIL-HDBK-217-Related Reliability Prediction Methodologies and Applications

| Procedural Method | Applications | Status |
|---|---|---|
| MIL-HDBK-217 | Military | Active |
| Telcordia SR-332 | Telecom | Active |
| CNET | Ground Military | Canceled |
| RDF-93 and 2000 | Civil Equipment | Active |
| SAE Reliability Prediction | Automotive | Canceled |
| British Telecom HRD-5 | Telecom | Canceled |
| Siemens SN29500 | Siemens Products | Canceled |
| NTT Procedure | Commercial and Military | Canceled |
| PRISM | Aeronautic and Military | Active |
| RIAC 217Plus | Aeronautic and Military | Active |
| FIDES | Aeronautic and Military | Active |

In most cases, the failure rate relationship that is used by these handbook techniques (adapted from MIL-HDBK-217) takes the form $\lambda_p = f(\lambda_G, \pi_i)$, where $\lambda_p$ is the calculated constant part failure rate; $\lambda_G$ is a constant part failure rate (also known as the base failure rate), which is provided by the handbook; and $\pi_i$ is a set of adjustment factors for the assumed constant failure rates. All of these handbook methods either provide a constant failure rate or a method to calculate it.
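Most of these handbooks instantiate $f$ multiplicatively, modifying the base rate with one or more adjustment factors: $\lambda_p = \lambda_G \prod_i \pi_i$. The Python sketch below shows that calculation; the factor names and numbers are hypothetical placeholders, not values from MIL-HDBK-217, SR-332, or any other handbook:

```python
from math import prod
from typing import Mapping

def part_failure_rate(base_rate: float, pi_factors: Mapping[str, float]) -> float:
    """Multiply a constant base failure rate by each adjustment (pi) factor.

    Assumes the purely multiplicative form lambda_p = lambda_G * prod(pi_i);
    real handbooks differ in which factors appear and how they are tabulated.
    """
    for name, value in pi_factors.items():
        if value <= 0:
            raise ValueError(f"pi factor {name!r} must be positive, got {value}")
    return base_rate * prod(pi_factors.values())

# Hypothetical inputs: base rate in FITs and made-up factor values.
lambda_g = 10.0
factors = {"pi_Q": 2.0, "pi_T": 1.5, "pi_E": 4.0}
print(part_failure_rate(lambda_g, factors))  # 120.0
```

Because every factor multiplies, doubling any single π factor doubles the prediction; the form has no way to express interactions between stresses or a hazard that changes with time.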

The handbook methods that calculate constant failure rates use one or more multiplicative adjustment factors (which may include factors for part quality, temperature, design, or environment) to modify a given constant base failure rate. The constant failure rates in the handbooks are obtained by performing a linear regression analysis on the field data. The aim of the regression analysis is to quantify the expected theoretical relationship between the constant part failure rate and the independent variables.

The first step in the analysis is to examine the correlation matrix for all variables, showing the correlation between the dependent variable (the constant failure rate) and each independent variable. The independent variables used in the regression analysis typically include such factors as the device type, package type, screening level, ambient temperature, and application stresses. The second step is to apply stepwise multiple linear regression to the data, expressing the constant failure rate as a function of the relevant independent variables and their respective coefficients. This is the step that involves the evaluation of the above π factors. The constant failure rate is then calculated using the regression formula and the input parameters.
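One way to see how π factors fall out of the regression step: if the model is multiplicative, taking logarithms makes it linear, so least squares on log failure rates recovers $\ln \lambda_G$ and the log of each factor. This sketch uses synthetic, noise-free data and NumPy's `lstsq`; the two 0/1 regressors (screening and a harsh environment) are illustrative assumptions, and it does not reproduce the stepwise variable selection a handbook developer would use:

```python
import numpy as np

# Hypothetical field data, one row per part population.
screened  = np.array([0, 1, 0, 1, 0, 1], dtype=float)  # 1 if parts were screened
harsh_env = np.array([0, 0, 1, 1, 1, 0], dtype=float)  # 1 if deployed in a harsh environment

# Noise-free "observed" rates from an assumed multiplicative model.
lambda_g_true, pi_screen_true, pi_env_true = 50.0, 0.5, 4.0
observed = lambda_g_true * pi_screen_true**screened * pi_env_true**harsh_env
y = np.log(observed)

# Step 1: correlation of the (log) failure rate with each candidate regressor.
corr = np.corrcoef(np.vstack([y, screened, harsh_env]))
print("corr with screened: ", round(corr[0, 1], 3))
print("corr with harsh_env:", round(corr[0, 2], 3))

# Step 2: least squares in log space; exponentiated coefficients are the factors.
X = np.column_stack([np.ones_like(y), screened, harsh_env])
coefs, *_ = np.linalg.lstsq(X, y, rcond=None)
lambda_g, pi_screen, pi_env = np.exp(coefs)
print(f"lambda_G ~ {lambda_g:.2f}, pi_screen ~ {pi_screen:.2f}, pi_env ~ {pi_env:.2f}")
```

With real field data the residuals are not zero, and the fitted factors inherit whatever populations, environments, and failure causes happened to be in the data set.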

The regression analysis does not ignore data entries that lack essential information, because the scarcity of data necessitates that all available data be used. To accommodate such data entries in the regression analysis, a separate “missing” category may be constructed for each potential factor when the required information is not available. A regression factor can be calculated for each “missing” category, considering it a unique operational condition. If the coefficient for the unknown category is significantly smaller than the next lower category or larger than the next higher category, then the factor in question cannot be quantified by the available data, and additional data are required before the factor can be fully evaluated (Standards Committee of the IEEE Reliability, 2010). A constant failure rate model for non-operating conditions can be extrapolated by eliminating all operation-related stresses, such as temperature rise or electrical stress ratio, from the handbook prediction models. As with the problems related to missing data, using handbooks such as MIL-HDBK-217 to calculate constant non-operating failure rates is an extrapolation of the empirical relationship of the source field data beyond the range in which it was gathered.
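The “missing” category technique can be sketched as ordinary dummy coding with one extra column. In this Python sketch the factor levels and coefficients are hypothetical, and `missing_is_consistent` is a deliberately simplified stand-in for the comparison rule quoted above: it only asks whether the “missing” coefficient falls inside the range spanned by the known levels, which is an assumption, not the IEEE 1413.1 procedure:

```python
import numpy as np

def one_hot_with_missing(values, levels):
    """Dummy-code a categorical factor, giving unrecorded entries (None) their
    own "missing" column instead of discarding the row.

    The first entry in `levels` is the reference category and gets no column.
    """
    reference, *others = levels
    columns = others + ["missing"]
    matrix = np.zeros((len(values), len(columns)))
    for row, value in enumerate(values):
        key = "missing" if value is None else value
        if key == reference:
            continue  # reference level: all-zero row
        if key not in columns:
            raise ValueError(f"unknown level {value!r}")
        matrix[row, columns.index(key)] = 1.0
    return columns, matrix

def missing_is_consistent(coefs, tol=0.0):
    """Simplified check (an assumption): the "missing" coefficient should lie
    within the range of the known levels' coefficients, give or take `tol`.
    If it does not, the factor needs more data before it can be quantified."""
    known = [c for name, c in coefs.items() if name != "missing"]
    return min(known) - tol <= coefs["missing"] <= max(known) + tol

quality = ["commercial", None, "screened", "commercial", None, "military"]
cols, X = one_hot_with_missing(quality, ["commercial", "screened", "military"])
print(cols)  # ['screened', 'military', 'missing']
print(X)

# Hypothetical fitted coefficients (log-rate scale, reference level = 0.0).
print(missing_is_consistent({"commercial": 0.0, "screened": -0.4, "military": -0.9, "missing": -0.2}))  # True
print(missing_is_consistent({"commercial": 0.0, "screened": -0.4, "military": -0.9, "missing": 0.8}))   # False
```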

TELCORDIA SR-332

Telcordia SR-332 is a reliability prediction methodology developed by Bell Communications Research (Bellcore) primarily for telecommunications companies (Telcordia Technologies, 2001). The most recent revision of the methodology is Issue 3, dated January 2011. The stated purpose of Telcordia SR-332 is “to document the recommended methods for predicting device and unit hardware reliability (and also) for predicting serial system hardware reliability” (Telcordia Technologies, 2001). The methodology is based on empirical statistical modeling of commercial telecommunication systems whose physical design, manufacture, installation, and reliability assurance practices meet the appropriate Telcordia (or equivalent) generic and system-specific requirements.

In general, Telcordia SR-332 adapts the equations in MIL-HDBK-217 to represent the conditions that telecommunication equipment experience in the field. Results are provided as a constant failure rate, and the handbook provides the upper 90 percent confidence-level point estimate for the constant failure rate. The main concepts in MIL-HDBK-217 and Telcordia SR-332 are similar, but Telcordia SR-332 also has the ability to incorporate burn-in, field, and laboratory test data for a Bayesian analytical approach that incorporates both prior information and observed data to generate an updated posterior distribution. For example, Telcordia SR-332 contains a table of the “first-year multiplier” (Telcordia Technologies, 2001, p. 2-2), which is the predicted ratio of the number of failures of a part in its first year of operation in the field to the number of failures of the part in another year of (steady state) operation.
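SR-332’s own combination formulas are not reproduced in this paper, so the following is only a generic illustration of the Bayesian idea: treat a handbook prediction as the mean of a gamma prior on a constant failure rate, then update it with observed failures and device-hours (the gamma distribution is conjugate to Poisson failure counts). The helper names and the prior weight expressed in “equivalent device-hours” are hypothetical choices for illustration:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GammaBelief:
    """Gamma(shape, rate) belief about a constant failure rate in failures/hour."""
    shape: float  # behaves like a count of failures already "seen"
    rate: float   # behaves like device-hours of exposure already "seen"

    @property
    def mean(self) -> float:
        return self.shape / self.rate

def prior_from_prediction(predicted_rate: float, equivalent_hours: float) -> GammaBelief:
    """Hypothetical helper: center a gamma prior on a handbook prediction.

    `equivalent_hours` is an assumed tuning knob for how much test exposure the
    prediction is "worth"; larger values let the prior dominate the data.
    """
    return GammaBelief(shape=predicted_rate * equivalent_hours, rate=equivalent_hours)

def update(prior: GammaBelief, failures: int, device_hours: float) -> GammaBelief:
    """Conjugate gamma-Poisson update for failures observed at a constant rate."""
    return GammaBelief(prior.shape + failures, prior.rate + device_hours)

predicted = 2e-6                                          # hypothetical handbook prediction
prior = prior_from_prediction(predicted, equivalent_hours=1e6)
posterior = update(prior, failures=1, device_hours=4e6)   # hypothetical lab/field data
print(prior.mean, posterior.mean)                         # roughly 2e-06 and 6e-07
```

The posterior mean is a weighted average of the prediction and the observed rate: with prior weight $b$ device-hours, $f$ failures, and $T$ observed device-hours, it equals $\frac{b}{b+T}\lambda_{\text{pred}} + \frac{T}{b+T}\cdot\frac{f}{T}$, here 0.2 × 2×10⁻⁶ + 0.8 × 2.5×10⁻⁷ = 6×10⁻⁷. The result is still a single constant rate.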

This table in SR-332 contains the first-year multiplier for each value of the part device burn-in time in the factory. The part’s total burn-in time is the sum of the burn-in time at the part, unit, and system levels.

PRISM

PRISM is a reliability assessment method developed by the Reliability Analysis Center (RAC) (Reliability Assessment Center, 2001).

The method is available only as software, and the most recent version of the software is Version 1.5, released in May 2003. PRISM combines the empirical data of users with a built-in database using Bayesian techniques. In this technique, new data are combined using a weighted average method, but there is no new regression analysis. PRISM includes some nonpart factors such as interface, software, and mechanical problems.

PRISM calculates assembly- and system-level constant failure rates in accordance with similarity analysis, which is an assessment method that compares the actual life-cycle characteristics of a system with predefined …

FIDES

“… operating in severe environments (defense systems, aeronautics, industrial electronics, and transport). The FIDES Guide also aims to provide a concrete tool to develop and control reliability” (FIDES Group, 2009). The FIDES Guide contains two parts: a reliability prediction model and a reliability process control and audit guide. The FIDES Guide provides models for electrical, electronic, and electromechanical components. These prediction models take into account electrical, mechanical, and thermal overstresses.

These models also account for “failures linked to development, production, field operation and maintenance” (FIDES Group, 2009). The reliability process control guide addresses the procedures and organizations throughout the life cycle, but does not go into the use of the components themselves. The audit guide is a generic procedure that audits a company using three questions as a basis to “measure its capability to build reliable systems, quantify the process factors used in the calculation models, and identify actions for improvement” (FIDES Group, 2009).

NON-OPERATING CONSTANT FAILURE RATE PREDICTIONS

MIL-HDBK-217 does not have specific methods or data related to the non-operational failure of electronic parts and systems, although several different methods to estimate them were proposed in the 1970s and 1980s. The first methods used multiplicative factors based on the operating constant failure rates obtained using other handbook methods.

The reported values of such multiplicative factors are 0.03 or 0.1. The first value of 0.03 was obtained from an unpublished study of satellite clock failure data from 23 failures. The value of 0.1 is based on a RADC study from 1980.
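The early multiplicative approach amounts to scaling an operating prediction by a fixed fraction. In this Python sketch the factors 0.03 and 0.1 are the reported values above, while the 500-FIT operating rate is a hypothetical input:

```python
def nonoperating_rate(operating_rate: float, factor: float) -> float:
    """Early-style estimate: a fixed fraction of the operating failure rate."""
    if not 0.0 < factor <= 1.0:
        raise ValueError("factor should be a fraction of the operating rate")
    return operating_rate * factor

operating_fits = 500.0  # hypothetical operating rate from a handbook prediction
for factor in (0.03, 0.1):  # the two reported multiplicative factors
    print(f"factor {factor}: {nonoperating_rate(operating_fits, factor):.1f} FITs")
```

Because the two published factors differ by more than a factor of three, choosing between them changes the estimate by that same ratio, with no reference to storage temperature, humidity, or any other non-operating stress.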

RAC followed up the efforts with the RADC-TR-85-91 method. This method was described as being equivalent to MIL-HDBK-217 for non-operating conditions, and it contained the same number of environmental factors and the same type of quality factors as the then-current MIL-HDBK-217. Some other non-operating constant failure rate tables from the 1970s and 1980s include the MIRADCOM Report LC-78-1, RADC-TR-73-248, and NONOP-1.

IEEE 1413 AND COMPARISON OF RELIABILITY PREDICTION METHODOLOGIES

The IEEE Standard 1413, IEEE Standard Methodology for Reliability Prediction and Assessment for Electronic Systems and Equipment (IEEE Standards Association, 2010), provides a framework for reliability prediction procedures for electronic equipment at all levels. It focuses on hardware reliability prediction methodologies and specifically excludes software reliability, availability and maintainability, human reliability, and proprietary reliability prediction data and methodologies. IEEE 1413.1, Guide for Selecting and Using Reliability Predictions Based on IEEE 1413 (IEEE Standards Association, 2010), aids in the selection and use of reliability prediction methodologies that satisfy IEEE 1413. Table D-4 shows a comparison of some of the handbook-based reliability prediction methodologies based on the criteria in IEEE 1413 and 1413.1. Although only five of the many failure prediction methodologies have been analyzed, they are representative of the other constant-failure-rate-based techniques. Several publications have assessed other similar aspects of prediction methodologies.

Examples include O’Connor (1985a, 1985b, 1988, 1990), O’Connor and Harris (1986), Bhagat (1989), Leonard (1987, 1988), Wong (1989, 1990, 1993), Bowles (1992), Leonard and Pecht (1993), Nash (1993), Hallberg (1994), and Lall et al. These methodologies do not identify the root causes, failure modes, and failure mechanisms. Therefore, these techniques offer limited insight into the real reliability issues and could potentially misguide efforts to design for reliability, as demonstrated in Cushing et al. (1996), Hallberg (1987, 1991), Pease (1991), Watson (1992), Pecht and Nash (1994), and Knowles (1993). The following sections review some of the major shortcomings of handbook-based methodologies and present case studies highlighting the inconsistencies and inaccuracies of these approaches.

SHORTCOMINGS OF MIL-HDBK-217 AND ITS PROGENY

MIL-HDBK-217 has several shortcomings, and it has been critiqued extensively since the early 1960s. Some of the initial arguments and results contradicting the handbook methodologies were dismissed as fraudulent or as sourced from manipulated data (see McLinn, 1990). However, by the early 1990s, it was agreed that MIL-HDBK-217 was severely limited in its capabilities as far as reliability prediction was concerned (Pecht and Nash, 1994).

One of the main drawbacks of MIL-HDBK-217 was that the predictions were based purely on “simple heuristics,” as opposed to engineering design principles and physics of failure. The handbook could not even account for different loading conditions (Jais et al., 2013). Furthermore, because the handbook was focused mainly on component-level analyses, it could address only a fraction of overall system failure rates. In addition, even if MIL-HDBK-217 were used only to arrive at a rough estimate of the reliability of a component, it would need to be constantly updated with newer technologies, but this was never the case.

TABLE D-4 Comparison of Reliability Prediction Methodologies

| Questions for Comparison | MIL-HDBK-217F | Telcordia SR-332 | 217Plus | PRISM | FIDES |
|---|---|---|---|---|---|
| Does the methodology identify the sources used to develop the prediction methodology and describe the extent to which the source is known? | Yes | Yes | Yes | Yes | Yes |
| Are assumptions used to conduct the prediction according to the methodology identified, including those used for the unknown data? | Yes | Yes | Yes | Yes | Yes (must pay for modeling software) |
| Are sources of uncertainty in the prediction results identified? | No | Yes | Yes | No | Yes |
| Are limitations of the prediction results identified? | Yes | Yes | Yes | Yes | Yes |
| Are failure modes identified? | No | No | No | No | Yes, the failure mode profile varies with the life profile |
| Are failure mechanisms identified? | No | No | No | No | Yes |
| Are confidence levels for the prediction results identified? | No | Yes | Yes | No | No |
| Does the methodology account for materials, geometry, and architectures that comprise the parts? | No | No | No | No | Yes, when relevant materials, geometry and such are considered in each part model |
| Does the methodology account for part quality? | Quality levels are derived from specific part-dependent data and the number of manufacturer screens the part goes through | Four quality levels that are based on generalities regarding the origin and screening of parts | Quality is accounted for in the part quality process grading factor | Part quality level is implicitly addressed by process grading factors and the growth factor, $\pi_G$ | Yes |
| Does the methodology allow incorporation of reliability data and experience? | No | Yes, through Bayesian method of weighted averaging | Yes, through Bayesian method of weighted averaging | Yes, through Bayesian method of weighted averaging | This can be done independently of the prediction methodology used |
| What is the coverage of electronic parts? | Extensive | Extensive | Extensive | Extensive | Extensive |
| What failure probability distributions are supported? | Exponential | Exponential | Exponential | Exponential | Phase contributions |

Does the methodology account for life-cycle environmental conditions, including those encountered during (a) product usage (including power and voltage conditions), (b) packaging, (c) handling, (d) storage, (e) transportation, and (f) maintenance conditions? It does not consider the different aspects of environment. There is a temperature factor $\pi_T$ and an environment factor $\pi_E$ in the prediction equation. Yes, for normal use life of the product from early life to steady-state operation over the normal product life. It considers all of the life-cycle environmental conditions.

Input data required for the analysis: Information on part count and operational conditions (e.g., temperature, voltage; specifics depend on the handbook used).

Other requirements for performing the analysis: Effort required is relatively small for using the handbook method and is limited to obtaining the handbook.

SOURCE: Adapted from IEEE 1413.1.

Incorrect Assumption of Constant Failure Rates

MIL-HDBK-217 assumes that the failure rates of all electronic components are constant, regardless of the nature of actual stresses that the component or system experiences. This assumption was first made based on statistical consideration of failure data independent of the cause or nature of failures. Since then, significant developments have been made in the understanding of the physics of failure, failure modes, and failure mechanisms. It is now understood that failure rates, and, more specifically, the hazard rates (or instantaneous failure rates), vary with time. Studies have shown that the hazard rates for electronic components, such as transistors, that are subjected to high temperatures and high electric fields, under common failure mechanisms, show an increasing hazard rate with time (Li et al., 2008; Patil et al., 2009).

At the same time, in components and systems with manufacturing defects, failures may manifest themselves early on, and as these parts fail, they get screened. Therefore, a decreasing failure rate may be observed initially in the life of a product. Hence, it is safe to say that in the life cycle of an electronic component or system, the failure rate is constantly varying. McLinn (1990) and Bowles (2002) describe the history, math, and flawed reasoning behind constant failure rate assumptions. Epstein and Sobel (1954) provide a historical review of some of the first applications of the exponential distribution to model mortality in actuarial studies for the insurance industry in the early 1950s.
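The three behaviors described above map onto the shape parameter β of a Weibull lifetime, whose hazard is $h(t) = \frac{\beta}{\eta}\left(\frac{t}{\eta}\right)^{\beta-1}$ with scale η: β < 1 gives a decreasing hazard (early failures being screened out), β = 1 reduces to the exponential’s constant hazard, and β > 1 gives an increasing, wear-out hazard. The Weibull model is a standard illustration chosen here, not something the cited studies prescribe, and the parameter values are arbitrary:

```python
def weibull_hazard(t: float, shape: float, scale: float) -> float:
    """Instantaneous failure (hazard) rate of a Weibull(shape, scale) lifetime, t > 0."""
    if t <= 0 or shape <= 0 or scale <= 0:
        raise ValueError("t, shape, and scale must all be positive")
    return (shape / scale) * (t / scale) ** (shape - 1)

def trend(shape: float, scale: float = 10_000.0) -> str:
    """Compare the hazard early (100 h) and late (10,000 h) in life."""
    early = weibull_hazard(100.0, shape, scale)
    late = weibull_hazard(10_000.0, shape, scale)
    if early > late:
        return "decreasing"
    if early < late:
        return "increasing"
    return "constant"

for shape in (0.5, 1.0, 2.0):
    print(shape, trend(shape))  # 0.5 decreasing, 1.0 constant, 2.0 increasing
```

Only the β = 1 case matches the handbook assumption; any other value makes the “failure rate” depend on the age at which it is measured.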

Since exponential distributions are associated with constant failure rates, which help simplify calculations, they were adopted by the reliability engineering community. Through subsequent widespread usage, “the constant failure rate model, right or wrong, became the ‘reliability paradigm’” (McLinn, 1990). McLinn notes how, once this paradigm was adopted, its practitioners, based on their common beliefs, “became committed more to the propagation of the paradigm, than the accuracy of the paradigm itself.” By the end of the 1950s and in the early 1960s, more test data were obtained from experiments. The data seemed to indicate that electronic systems at that time had decreasing failure rates (Milligan, 1961; Pettinato and McLaughlin, 1961). However, the natural tendency of the proponents of the constant failure rate model was to explain away these results as anomalies rather than to provide a “fuller explanation” (McLinn, 1990). Concepts such as inverse burn-in or endless burn-in (Bezat and Montague, 1979; McLinn, 1989) and mysterious unexplained causes were used to dismiss anomalies.

Proponents of the constant failure rate model believed that the hazard rates, or instantaneous failure rates, of electronic systems would follow a bathtub curve, with an initial region (called infant mortality) of decreasing failure rate during which failures due to manufacturing defects would be weeded out. This stage would then be followed by a region of constant failure rate, and toward the end of the life cycle the failure rate would increase due to wear-out mechanisms. This theory would help reconcile the observed decreasing failure rates with the constant failure rate model. When Peck (1971) published data from semiconductor testing, he observed a decreasing failure rate trend that lasted for many thousands of hours of operation. This was said to have been caused by “freaks” and was later explained as an extended infant mortality period. Bellcore and SAE created two standards using a prediction methodology based on constant failure rates, but they subsequently adjusted their techniques to account for this phenomenon (decreasing failure rates lasting several thousand hours) by extending the infant mortality region to 10,000 hours and 100,000 hours, respectively.
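The bathtub curve described above is often sketched as the sum of three hazards: a decreasing infant-mortality term, a constant term, and an increasing wear-out term. A minimal Python sketch with arbitrary, illustrative parameters; it reuses the Weibull hazard form and is not a model taken from the Bellcore or SAE procedures:

```python
def weibull_hazard(t: float, shape: float, scale: float) -> float:
    """Weibull hazard rate h(t) = (shape / scale) * (t / scale) ** (shape - 1), for t > 0."""
    return (shape / scale) * (t / scale) ** (shape - 1)

def bathtub_hazard(t: float) -> float:
    """Illustrative bathtub: decreasing + constant + increasing hazard terms (t > 0)."""
    infant_mortality = weibull_hazard(t, shape=0.5, scale=50_000.0)  # decreasing
    random_failures = 1e-5                                           # constant
    wear_out = weibull_hazard(t, shape=4.0, scale=100_000.0)         # increasing
    return infant_mortality + random_failures + wear_out

for hours in (10.0, 1_000.0, 20_000.0, 80_000.0, 150_000.0):
    print(f"{hours:>9,.0f} h: {bathtub_hazard(hours):.2e} failures/hour")
```

In this toy model a “flat bottom” exists only where the first and third terms happen to be small at the same time; a slowly decaying first term, of the kind Peck’s extended decreasing trend suggests, leaves little or no constant region at all.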

However, the bathtub curve theory was further challenged by Wong (1981) with his work describing the demise of the bathtub curve. Claims were made by constant failure rate proponents suggesting that data challenging the bathtub curve and constant failure rate models were fraudulently manipulated (Ryerson, 1982). These allegations were merely asserted with no supporting analysis or explanation.

In order to reconcile some of the results from contemporary publications, the roller coaster curve, essentially a modified bathtub curve, was introduced by Wong and Lindstrom (1989). McLinn (1990, p. 239) noted that the arguments and modifications made by the constant failure rate proponents “were not always based on science or logic but may be unconsciously based on a desire to adhere to the old and familiar models.” Figure D-1 depicts the bathtub curve and the roller coaster curve. There has been much debate about the suitability of the constant failure rate assumption for modeling component reliability, and the assumption has been controversial in terms of assessing reliability during design: see, for example, Blanks (1980), Bar-Cohen (1988), Coleman (1992), and Cushing et al. The mathematical perspective has been discussed in detail in Bowles (2002).

FIGURE D-1 The bathtub curve (left) and the roller coaster curve (right). See text for discussion.

A complete understanding of how the constant failure rates are evaluated, along with the implicit assumptions, is therefore vital to interpreting both reliability predictions and future design. It is important to remember that the constant failure rate models used in some of the handbooks are calculated by performing a linear regression analysis on field failure data or generic test data. These data and the resulting constant failure rates are not representative of the actual failure rates that a system might experience in the field (unless the environmental and loading conditions are static and the same for all devices). Because a device might see several different types of stresses and environmental conditions, it could be degrading in multiple ways. Hence, the lifetime of an electronic component or device can be approximated as a combination of several different failure mechanisms and modes, each having its own distribution, as shown in Figure D-2.
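To illustrate why a lifetime governed by several mechanisms cannot be summarized by one constant rate, the sketch below combines two independent, hypothetical mechanisms as competing risks (the part fails when the first mechanism does, so reliabilities multiply) and compares the result with an exponential model that has the same mean time to failure. The mechanism parameters are invented for illustration, and the MTTF is obtained by simple trapezoidal integration of R(t):

```python
import math

# Two hypothetical, independent mechanisms: (Weibull shape, scale in hours).
MECHANISMS = {"early_defects": (0.7, 400_000.0), "wear_out": (3.0, 60_000.0)}

def system_reliability(t: float) -> float:
    """Competing risks: the part survives to t only if no mechanism has occurred yet."""
    return math.prod(math.exp(-((t / scale) ** shape)) for shape, scale in MECHANISMS.values())

def mean_time_to_failure(reliability, horizon: float = 300_000.0, steps: int = 30_000) -> float:
    """MTTF = integral of R(t) from 0 to infinity, truncated at `horizon` and
    approximated with the trapezoidal rule."""
    dt = horizon / steps
    total = 0.5 * (reliability(0.0) + reliability(horizon))
    total += sum(reliability(i * dt) for i in range(1, steps))
    return total * dt

mean_life = mean_time_to_failure(system_reliability)
constant_rate = 1.0 / mean_life  # exponential model with the same MTTF

for t in (5_000.0, 30_000.0, 60_000.0, 100_000.0):
    mechanisms = system_reliability(t)
    exponential = math.exp(-constant_rate * t)
    print(f"t={t:>9,.0f} h  two mechanisms: {mechanisms:.3f}  constant rate: {exponential:.3f}")
```

With these made-up parameters, the same-MTTF exponential understates survival through mid-life and overstates it late in life, because a single constant rate cannot represent the wear-out mechanism’s increasing hazard.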

Furthermore, this degradation is nondeterministic, so the product will have differing failure rates throughout its life. It would be impossible to capture this behavior in a constant failure rate model. Therefore, all methodologies based on the assumption of a constant failure rate are fundamentally flawed and cannot be used to predict reliability in the field.

FIGURE D-2 The physics-of-failure perspective on the bathtub curve and failure rates. See text for discussion.

Lack of Consideration of Root Causes of Failures, Failure Modes, and Failure Mechanisms

After the assumption of constant failure rates, the lack of any consideration of root causes of failures and failure modes and mechanisms is another major drawback of MIL-HDBK-217 and other similar approaches. Without knowledge or understanding of the site, root cause, or mechanism of failures, the load and environment history, the materials, and the geometries, the calculated failure rate is meaningless in the context of reliability prediction.

As noted above, not only does this undermine reliability assessment in general, it also obstructs product design and process improvement. Cushing et al. (1993) note that there are two major consequences of using MIL-HDBK-217. First, this prediction methodology “does not give the designer or manufacturer any insight into, or control over, the actual causes of failure since the cause-and-effect relationships impacting reliability are not captured. Yet, the failure rate obtained is often used as a reverse engineering tool to meet reliability goals.” Second, “MIL-HDBK-217 does not address the design & usage parameters that greatly influence reliability, which results in an inability to tailor a MIL-HDBK-217 prediction using these key parameters” (Cushing et al., 1993).
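The arithmetic behind this kind of "reverse engineering" is worth seeing. Under the constant-failure-rate assumption, the failure rate of a series system is the sum of its part failure rates, and MTBF is the reciprocal of that sum.

The Python sketch below uses hypothetical part names and rates. It also uses a hypothetical helper, `scale_to_goal`, that shrinks every rate by one uniform factor until an MTBF target is met. No failure site, mechanism, or load appears anywhere in the calculation.

```python
# Hypothetical part failure rates, in failures per 10^6 hours (constant-rate assumption).
PART_RATES_PER_1E6_H = {"microcontroller": 5.0, "capacitors": 2.0, "connector": 3.0}


def system_failure_rate(part_rates):
    """Series system with constant rates: lambda_sys is the sum of the part rates."""
    return sum(part_rates.values())


def mtbf_hours(part_rates):
    """MTBF = 1 / lambda_sys; meaningful only if every rate really is constant."""
    return 1.0e6 / system_failure_rate(part_rates)


def scale_to_goal(part_rates, mtbf_goal_h):
    """Hypothetical 'reverse engineering' step: shrink every rate by one factor
    until the predicted MTBF meets the goal. No failure mechanism is consulted."""
    current = mtbf_hours(part_rates)
    if current >= mtbf_goal_h:
        return dict(part_rates)
    factor = current / mtbf_goal_h
    return {name: rate * factor for name, rate in part_rates.items()}


if __name__ == "__main__":
    print(f"predicted MTBF: {mtbf_hours(PART_RATES_PER_1E6_H):,.0f} h")
    adjusted = scale_to_goal(PART_RATES_PER_1E6_H, mtbf_goal_h=200_000.0)
    for name, rate in adjusted.items():
        print(f"{name:>16}: {rate:.2f} failures per 10^6 h")
    print(f"MTBF after scaling: {mtbf_hours(adjusted):,.0f} h")
```

Both MTBF values are pure bookkeeping. The same 200,000 h target could be reached by lowering any combination of rates, so the figure tells the designer nothing about what to change physically.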

In countries such as Japan (see Kelly et al., 1995), Singapore, and Taiwan, where the focus is on product improvement, physics of failure is the only approach used for reliability assessment. In stark contrast, in the United States and Europe, a focus on “quantification of reliability and device failure rate prediction has been more common” (Cushing et al., 1993).

This approach has turned reliability assessment into a numbers game, with greater importance given to the MTBF value and the failure rate than to the cause of failure. The reason most often cited for rejecting physics-of-failure-based approaches in favor of simplistic mathematical regression analysis was the complicated and sophisticated nature of physics-of-failure models. In hindsight, this rejection of physics-based models, made without fully evaluating the merits of the approach and without foresight, was poor engineering practice.

Though MIL-HDBK-217 provides inadequate design guidance, it has often been used in the design of boards, circuit cards, and other assemblies. Research sponsored by the U.S. Army (Pecht et al., 1992) and the National Institute of Standards and Technology (NIST) (Kopanski et al., 1991) explored an example of design misguidance that resulted from device failure rate prediction methodologies, concerning the relationship between thermal stresses and microelectronic failure mechanisms. In this case, MIL-HDBK-217 would clearly not be able to distinguish between the two separate failure mechanisms. Results from another independent study by Boeing (Leonard, 1991) corroborated these findings. The MIL-HDBK-217-based methodologies also cannot be used to compare and evaluate competing designs: they cannot provide accurate comparisons or even a specification of accuracy. Physics of failure, in contrast, “is an approach to design, reliability assessment, testing, screening and evaluating stress margins by employing knowledge of root-cause failure processes to prevent product failures through robust design and manufacturing practices” (Lall and Pecht, 1993).
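Why one lumped temperature factor can misguide a design can be shown with two thermally driven mechanisms that respond differently to the same change. The Python sketch below makes hypothetical assumptions:

- Mechanism A depends on steady-state temperature through an Arrhenius law with Ea = 0.7 eV.
- Mechanism B depends on the temperature-cycle range through a Coffin-Manson-type power law, N ∝ ΔT^(-2).

The design change and every number in the sketch are made up for illustration.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_life_ratio(t_old_c, t_new_c, ea_ev):
    """Life(new) / life(old) for a mechanism driven by steady-state temperature."""
    t_old_k = t_old_c + 273.15
    t_new_k = t_new_c + 273.15
    return math.exp(ea_ev / BOLTZMANN_EV_PER_K * (1.0 / t_new_k - 1.0 / t_old_k))


def cycling_life_ratio(dt_old, dt_new, exponent):
    """Cycles-to-failure(new) / cycles-to-failure(old) for N proportional to dT**(-exponent)."""
    return (dt_old / dt_new) ** exponent


# Hypothetical design change: mean temperature drops from 85 C to 75 C,
# but each thermal cycle now spans 60 C instead of 40 C.
steady_ratio = arrhenius_life_ratio(85.0, 75.0, ea_ev=0.7)
cycling_ratio = cycling_life_ratio(40.0, 60.0, exponent=2.0)

if __name__ == "__main__":
    print(f"steady-state mechanism: life x {steady_ratio:.2f}")
    print(f"cycling mechanism:      life x {cycling_ratio:.2f}")
```

The steady-state view predicts roughly a 1.9x life improvement, while the cycling mechanism's life drops to about 0.44x. A method that sees only steady-state temperature would endorse this change.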

The physics-of-failure approach to reliability involves many steps, including: (1) identifying potential failure mechanisms, failure modes, and failure sites; (2) identifying appropriate failure models and their input parameters; (3) determining the variability of each design parameter when possible; (4) computing the effective reliability function; and (5) accepting the design if the estimated time-dependent reliability function meets or exceeds the required value over the required time period. Table D-5 compares several aspects of MIL-HDBK-217 with those of the physics-of-failure approach. Several physics-of-failure-based models have been developed for different types of materials, at different levels of electronic packaging (chip, component, board), and under different loading conditions (vibration, chemical, electrical). Though it would be impossible to list or review all of them, many models have been discussed in the physics-of-failure tutorial series in IEEE Transactions on Reliability. Examples include Dasgupta and Pecht (1991), Dasgupta and Hu (1992a), Dasgupta and Hu (1992b), Dasgupta (1993), Dasgupta and Haslach (1993), Engel (1993), Li and Dasgupta (1993), Al-Sheikhly and Christou (1994), Li and Dasgupta (1994), Rudra and Jennings (1994), Young and Christou (1994), and Diaz et al.
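The five steps listed above can be sketched as a small Monte Carlo check. The Python code below is an illustration under hypothetical assumptions, not a prescribed procedure:

- Two mechanisms are assumed, each with a simple life model.
- The spread of each input parameter is made up.
- The system fails at the first mechanism to occur (competing risks).
- The design is accepted if the empirical R(t) at the required time meets a target.

The function names, distributions, and numbers are all placeholders.

```python
import math
import random

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


# Steps 1-2: two hypothetical mechanisms and a failure model for each (hours to failure).
def ttf_temperature_driven(a_hours, ea_ev, temp_c):
    """Arrhenius-type life model: t = A * exp(Ea / (k * T))."""
    return a_hours * math.exp(ea_ev / (BOLTZMANN_EV_PER_K * (temp_c + 273.15)))


def ttf_cycling_driven(c_cycles, delta_t, exponent, cycles_per_hour):
    """Coffin-Manson-type model: N = C * dT**(-n), converted to hours."""
    return c_cycles * delta_t ** (-exponent) / cycles_per_hour


def sample_system_ttf(rng):
    """Step 3: draw each input from an assumed variability, then take the first failure."""
    a = 1.0e-5 * math.exp(rng.gauss(0.0, 0.3))   # lognormal scatter in A
    ea = rng.gauss(0.7, 0.02)                    # activation energy, eV
    temp = rng.gauss(85.0, 3.0)                  # operating temperature, C
    c = 1.0e8 * math.exp(rng.gauss(0.0, 0.3))    # lognormal scatter in C
    dt = max(rng.gauss(40.0, 4.0), 1.0)          # cycle range, C (kept positive)
    return min(
        ttf_temperature_driven(a, ea, temp),
        ttf_cycling_driven(c, dt, exponent=2.0, cycles_per_hour=1.0),
    )


def reliability(ttfs, t):
    """Step 4: empirical reliability R(t) = fraction of samples surviving past t."""
    return sum(1 for x in ttfs if x > t) / len(ttfs)


def accept_design(ttfs, t_required, r_required):
    """Step 5: accept only if R(t_required) meets the requirement."""
    return reliability(ttfs, t_required) >= r_required


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the sketch is repeatable
    ttfs = [sample_system_ttf(rng) for _ in range(20_000)]
    for t in (10_000.0, 20_000.0, 40_000.0):
        print(f"R({t:,.0f} h) = {reliability(ttfs, t):.3f}")
    print("accept:", accept_design(ttfs, t_required=20_000.0, r_required=0.95))
```

Unlike a single λ, the output is a time-dependent reliability function. Each sampled failure can also be traced back to the mechanism and parameters that produced it.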

The physics-of-failure models do have some limitations as well. The results obtained from these models will have a certain degree of uncertainty and errors associated with them, which can be partly mitigated by calibrating them with accelerated testing. The physics-of-failure methods may also be limited in their ability to combine the results of the same model for multiple stress conditions or their ability to aggregate the failure prediction results from individual failure modes to a complex system with multiple competing and common cause failure modes. However, there are recognized methods to address these issues, with continuing research promising improvements; for details, see Asher and Feingold (1984), Montgomery and Runger (1994), Shetty et al. (2002), Mishra et al. (2004), and Ramakrishnan and Pecht (2003).
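The calibration against accelerated testing mentioned above can be illustrated with the simplest case. The Python sketch below assumes a single mechanism that follows an Arrhenius life model. It takes two hypothetical accelerated-test median lives (2,000 h at 125 °C and 600 h at 150 °C), estimates the activation energy from them, and projects life to a 55 °C use condition.

With only two test points there is no estimate of scatter, so the projection carries the kind of uncertainty the paragraph describes. The function names are illustrative.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def kelvin(temp_c):
    return temp_c + 273.15


def fit_activation_energy(life_1, temp_1_c, life_2, temp_2_c):
    """Ea from two accelerated tests, assuming life = A * exp(Ea / (k * T))."""
    inv_t_diff = 1.0 / kelvin(temp_1_c) - 1.0 / kelvin(temp_2_c)
    return BOLTZMANN_EV_PER_K * math.log(life_1 / life_2) / inv_t_diff


def extrapolate_life(ea_ev, life_ref, temp_ref_c, temp_use_c):
    """Project the reference-test life to another temperature with the fitted Ea."""
    return life_ref * math.exp(
        ea_ev / BOLTZMANN_EV_PER_K * (1.0 / kelvin(temp_use_c) - 1.0 / kelvin(temp_ref_c))
    )


if __name__ == "__main__":
    # Hypothetical median lives from two accelerated tests of one mechanism.
    ea = fit_activation_energy(2000.0, 125.0, 600.0, 150.0)
    print(f"fitted Ea = {ea:.2f} eV")
    print(f"projected median life at 55 C = {extrapolate_life(ea, 2000.0, 125.0, 55.0):,.0f} h")
```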

Despite the shortcomings of the physics-of-failure approach, it is more rigorous and complete and, hence, scientifically superior to the constant failure rate models. The constant failure rate reliability predictions have little relevance to the actual reliability of an electronic system in the field.

TABLE D-5 A Comparison Between the MIL-HDBK-217 and Physics-of-Failure Approaches

| Issue | MIL-HDBK-217 | Physics-of-Failure Approach |
|---|---|---|
| Model Development | Models cannot provide accurate design or manufacturing guidance since they were developed from assumed constant failure-rate data, not root-cause, time-to-failure data. A proponent stated: “Therefore, because of the fragmented nature of the data and the fact that it is often necessary to interpolate or extrapolate from available data when developing new models, no statistical confidence intervals should be associated with the overall model results” (Morris, 1990). | Models based on science/engineering first principles. Models can support deterministic or probabilistic applications. |
| Device Design Modeling | The MIL-HDBK-217 assumption of perfect designs is not substantiated due to lack of root-cause analysis of field failures. MIL-HDBK-217 models do not identify wearout issues. | Models for root-cause failure mechanisms allow explicit consideration of the impact that design, manufacturing, and operation have on reliability. |
| Device Defect Modeling | Models cannot be used to (1) consider explicitly the impact of manufacturing variation on reliability, or (2) determine what constitutes a defect or how to screen/inspect defects. | Failure mechanism models can be used to (1) relate manufacturing variation to reliability, and (2) determine what constitutes a defect and how to screen/inspect. |
| Device Screening | MIL-HDBK-217 promotes and encourages screening without recognition of potential failure mechanisms. | Provides a scientific basis for determining the effectiveness of particular screens or inspections. |
| Device Coverage | Does not cover new devices for approximately the first 5–8 years. Some devices, such as connectors, were not updated for more than 20 years. Developing and maintaining current design reliability models for devices is an impossible task. | Generally applicable (applies to both existing and new devices), since failure mechanisms are modeled, not devices. Thirty years of reliability physics research has produced and continues to produce peer-reviewed models for the key failure mechanisms applicable to electronic equipment. Automated computer tools exist for printed wiring boards and microelectronic devices. |
| Use of Arrhenius Model | Indicates to designers that steady-state temperature is the primary stress that designers can reduce to improve reliability. MIL-HDBK-217 models will not accept explicit temperature change inputs. MIL-HDBK-217 lumps different acceleration models from various failure mechanisms together, which is unsound. | The Arrhenius model is used to model the relationship between steady-state temperature and mean time-to-failure for each failure mechanism, as applicable. In addition, stresses due to temperature change, temperature rate of change, and spatial temperature gradients are considered, as applicable. |
| Operating Temperature | Explicitly considers only steady-state temperature. The effect of steady-state temperature is inaccurate because it is not based on root-cause, time-to-failure data. | The appropriate temperature dependence of each failure mechanism is explicitly considered. Reliability is frequently more sensitive to temperature cycling, provided that adequate margins are given against temperature extremes (see Pecht et al., 1992). |
| Operational Temperature Cycling | Does not support explicit consideration of the impact of temperature cycling on reliability. No way of superposing the effects of temperature cycling and vibration. | Explicitly considers all stresses, including steady-state temperature, temperature change, temperature rate of change, and spatial temperature gradients, as applicable to each root-cause failure mechanism. |
| Input Data Required | Does not model critical failure contributors, such as materials, architectures, and realistic operating stresses. Minimal data in, minimal data out. | Information on materials, architectures, and operating stresses (the things that contribute to failures). This information is accessible from the design and manufacturing processes of leading electronics companies. |
| Output Data | Output is typically a (constant) failure rate, λ. A proponent stated: “MIL-HDBK-217 is not intended to predict field reliability and, in general, does not do a very good job in an absolute sense” (Morris, 1990). | Provides insight to designers on the impact of materials, architectures, loading, and associated variation. Predicts the time-to-failure (as a distribution) and failure sites for key failure mechanisms in a device or assembly. These failure times and sites can be ranked. This approach supports either deterministic or probabilistic treatment. |
| DoD/Industry Acceptance | Mandated by government; 30-year record of discontent. Not part of the U.S. Air Force Avionics Integrity Program (AVIP). No longer supported by senior U.S. Army leaders. | Represents the best practices of industry. |
| Coordination | Models have never been submitted to appropriate engineering societies and technical journals for formal peer review. | Models for root-cause failure mechanisms undergo continuous peer review by leading experts. New software and documentation are coordinated with the various DoD branches and other entities. |
| Relative Cost of Analysis | Cost is high compared with value added. Can misguide efforts to design reliable electronic equipment. | Intent is to focus on root-cause failure mechanisms and sites, which is central to good design and manufacturing. Acquisition is flexible, so costs are flexible. The approach can result in reduced life-cycle costs due to higher initial and final reliabilities, reduced probability of failing tests, reductions in hidden factory, and reduced support costs. |

SOURCE: Cushing et al. Reprinted with permission.

The weaknesses of the physics-of-failure approach can mostly be attributed to the lack of knowledge of the exact usage environment and loading conditions that a device might experience, and to the stochastic nature of the degradation process. However, with the availability of various sensors for data collection and data transmission, this gap in knowledge is being overcome. These weaknesses can also be addressed by augmenting the physics-of-failure approach with prognostics and health management approaches, such as the one shown in Figure D-3 (see Pecht and Gu, 2009). The process based on prognostics and health management does not predict reliability, but it does provide a reliability assessment based on in-situ monitoring of certain environmental or performance parameters.
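One common way to turn in-situ monitoring into a reliability assessment is life-consumption monitoring. Measured load cycles are converted into accumulated damage with a physics-of-failure model, and the remaining life is extrapolated from the damage rate.

The Python sketch below rests on three assumptions:

- The temperature cycles have already been counted and binned; the counting step is not shown.
- The cycling mechanism follows a hypothetical Coffin-Manson-type model with made-up constants.
- Damage accumulates according to the linear Palmgren-Miner rule.

It illustrates the idea only and is not the specific method of Pecht and Gu (2009).

```python
def cycles_to_failure(delta_t, c=1.0e8, n=2.0):
    """Hypothetical Coffin-Manson-type model: N = C * dT**(-n)."""
    return c * delta_t ** (-n)


def miner_damage(cycle_counts):
    """Palmgren-Miner damage D = sum(n_i / N_i) over binned cycle ranges."""
    return sum(count / cycles_to_failure(dt) for dt, count in cycle_counts.items())


def remaining_life_days(damage_so_far, damage_rate_per_day):
    """Days until D reaches 1 if the monitored loading continues unchanged."""
    if damage_rate_per_day <= 0.0:
        return float("inf")
    return max(0.0, 1.0 - damage_so_far) / damage_rate_per_day


if __name__ == "__main__":
    window_days = 30
    # Hypothetical cycle counts from in-situ monitoring: {cycle range in C: count}.
    observed = {20.0: 300, 40.0: 60, 60.0: 15}
    damage = miner_damage(observed)
    rate = damage / window_days
    print(f"damage accrued in window: {damage:.4f}")
    print(f"estimated remaining life: {remaining_life_days(damage, rate):,.0f} days")
```

The estimate is only as good as the assumption that future loading resembles the monitored window. That is why such a scheme keeps updating as new sensor data arrive rather than issuing a single prediction.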

This prognostics-based process combines the strengths of the physics-of-failure approach with live monitoring of the environment and operational loading conditions.

FIGURE D-3 Physics-of-failure-based prognostics and health management approach. For details, see Pecht and Gu (2009). SOURCE: Adapted from Gu and Pecht (2007). Reprinted with permission.

Inadequacy for System-Level Considerations

The MIL-HDBK-217 methodology is predominantly focused on component-level reliability prediction, not system-level reliability.

This focus may not have been unreasonable in the 1960s and 1970s, when components had higher failure rates and electronic systems were less complex than they are today. However, as Denson (1988) notes, an increase in system complexity and component quality has resulted in a shift of system-failure causes away from components toward system-leve.